OpenStack Series Chapter 6: Using ReaR to Back Up OpenStack

Overview of ReaR

Red Hat Enterprise Linux provides a recovery and system migration utility, Relax-and-Recover (ReaR).

ReaR is written in Bash. It can distribute rescue images and store backup files over several network transport methods.

ReaR produces a bootable rescue image. To restore a system, you boot it from this image and restore from the backup.

ReaR can use the following protocols to transport files:

HTTP/HTTPS

SSH/SCP

NFS

CIFS (SMB)

In this article, I use the ISO boot image format and the NFS protocol.
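With that combination, the ReaR configuration on a node comes down to a few variables. The block below is a minimal sketch of /etc/rear/local.conf. It assumes that the utility node (172.25.250.220) exports /ctl_plane_backups, as it does later in this lab. The Ansible role used later presumably generates the real file from its local.conf.j2 template, so the file on your nodes may contain more settings.

```shell
# /etc/rear/local.conf (minimal sketch; ReaR sources this file with Bash)
# Assumption: the NFS backup node is utility (172.25.250.220) exporting /ctl_plane_backups.

# Build a bootable ISO rescue image and copy it to the NFS share.
OUTPUT=ISO
OUTPUT_URL=nfs://172.25.250.220/ctl_plane_backups

# Use ReaR's built-in tar-based backup method and write the archive to the same share.
BACKUP=NETFS
BACKUP_URL=nfs://172.25.250.220/ctl_plane_backups
```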

Automate backup and restore by using Ansible

Ansible is the main method for creating ReaR images and restoring from them.

We’ll use the backup-and-restore role that ships with tripleo-ansible:

```
(undercloud) [stack@director ~]$ tree /usr/share/ansible/roles/backup-and-restore
/usr/share/ansible/roles/backup-and-restore
├── backup
│   └── tasks
│       ├── db_backup.yml
│       ├── main.yml
│       ├── service_manager_pause.yml
│       └── service_manager_unpause.yml
├── defaults
│   └── main.yml
├── meta
│   └── main.yml
├── molecule
│   └── default
│       ├── Dockerfile
│       ├── molecule.yml
│       ├── playbook.yml
│       └── prepare.yml
├── setup_nfs
│   └── tasks
│       └── main.yml
├── setup_rear
│   └── tasks
│       └── main.yml
├── tasks
│   ├── main.yml
│   ├── pacemaker_backup.yml
│   ├── setup_nfs.yml
│   └── setup_rear.yml
├── templates
│   ├── exports.j2
│   ├── local.conf.j2
│   └── rescue.conf.j2
└── vars
    └── redhat.yml

13 directories, 20 files
```
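Variables control most of the role's behavior, and the defaults/main.yml file in the tree above is where they are declared. The controller step later overrides one of them with -e. The following sketch lists the top-level variable names in that file. It assumes all of them share the tripleo_backup_and_restore_ prefix, which matches every role variable that appears in this article. list_role_defaults and ROLE_DIR are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: list the variables the backup-and-restore role declares in defaults/main.yml.
# Assumption: role variables all start with the tripleo_backup_and_restore_ prefix.
set -euo pipefail

ROLE_DIR="${ROLE_DIR:-/usr/share/ansible/roles/backup-and-restore}"

list_role_defaults() {
  local defaults="${1:-$ROLE_DIR/defaults/main.yml}"
  # Top-level YAML keys start at column 0; strip the trailing ':' and sort the names.
  grep -oE '^tripleo_backup_and_restore_[A-Za-z0-9_]+:' "$defaults" | tr -d ':' | sort
}

# Usage on the director:
#   list_role_defaults | grep exclude_paths
```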

Backing up the undercloud director

The lab environment is already prepared. We only need to run the setup command:

```
[student@workstation ~]$ lab controlplane-backup start

Setting up the Backup Node on utility:

 · Setting up NFS server on utility............................ SUCCESS
 · Creating playbooks for backup and restore on director....... SUCCESS
```

Preparing the NFS server

Log in to the undercloud (director) node.

```
[student@workstation ~]$ ssh director
Activate the web console with: systemctl enable --now cockpit.socket

This system is not registered to Red Hat Insights. See https://cloud.redhat.com/
To register this system, run: insights-client --register

Last login: Fri May 16 01:33:26 2025 from 172.25.250.9
```

Next, check the inventory file.

```
(undercloud) [stack@director ~]$ cat nfs-inventory.ini
[BACKUP_NODE]
backup ansible_host=172.25.250.220 ansible_user=student ansible_become_password=student
```

Note that the IP address in the inventory file belongs to the utility node:

```
(undercloud) [stack@director ~]$ ping utility -c 4
PING utility.lab.example.com (172.25.250.220) 56(84) bytes of data.
64 bytes from utility.lab.example.com (172.25.250.220): icmp_seq=1 ttl=64 time=0.675 ms
64 bytes from utility.lab.example.com (172.25.250.220): icmp_seq=2 ttl=64 time=0.833 ms
64 bytes from utility.lab.example.com (172.25.250.220): icmp_seq=3 ttl=64 time=0.227 ms
64 bytes from utility.lab.example.com (172.25.250.220): icmp_seq=4 ttl=64 time=0.253 ms

--- utility.lab.example.com ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 101ms
rtt min/avg/max/mdev = 0.227/0.497/0.833/0.263 ms
```
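You can also script this check. Read the ansible_host value for the backup host from the INI inventory, then compare it with the address that the utility name resolves to. This is a sketch: inventory_host_ip is a hypothetical helper, and it assumes the simple one-host-per-line INI layout shown above.

```shell
#!/usr/bin/env bash
# Sketch: print the ansible_host value for one host in an INI-style Ansible inventory.
# Assumption: host lines look like "backup ansible_host=172.25.250.220 ansible_user=student ...".
set -euo pipefail

inventory_host_ip() {
  local inventory="$1" host="$2"
  awk -v h="$host" '$1 == h {
      for (i = 2; i <= NF; i++)
        if ($i ~ /^ansible_host=/) { sub(/^ansible_host=/, "", $i); print $i; exit }
    }' "$inventory"
}

# Usage on the director:
#   inv_ip=$(inventory_host_ip ~/nfs-inventory.ini backup)
#   dns_ip=$(getent hosts utility | awk '{print $1}')
#   [ "$inv_ip" = "$dns_ip" ] && echo "backup node is utility ($inv_ip)"
```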

Finally, run the Ansible playbook to configure the utility server as the backup node.

```
(undercloud) [stack@director ~]$ ansible-playbook -v -i ~/nfs-inventory.ini \
--extra="ansible_ssh_common_args='-o StrictHostKeyChecking=no'" \
--become --become-user root --tags bar_setup_nfs_server \
~/bar_nfs_setup.yaml
...output omitted...
TASK [backup-and-restore : Gather variables for each operating system] ***********************
ok: [backup] => (item=/usr/share/ansible/roles/backup-and-restore/vars/redhat.yml) => {"ansible_facts": {"tripleo_backup_and_restore_nfs_packages": ["nfs-utils"], "tripleo_backup_and_restore_rear_packages": ["rear", "syslinux", "genisoimage", "nfs-utils"]}, "ansible_included_var_files": ["/usr/share/ansible/roles/backup-and-restore/vars/redhat.yml"], "ansible_loop_var": "item", "changed": false, "item": "/usr/share/ansible/roles/backup-and-restore/vars/redhat.yml"}

TASK [backup-and-restore : Gather variables for each operating system] ***********************
ok: [backup] => (item=/usr/share/ansible/roles/backup-and-restore/vars/redhat.yml) => {"ansible_facts": {"tripleo_backup_and_restore_nfs_packages": ["nfs-utils"], "tripleo_backup_and_restore_rear_packages": ["rear", "syslinux", "genisoimage", "nfs-utils"]}, "ansible_included_var_files": ["/usr/share/ansible/roles/backup-and-restore/vars/redhat.yml"], "ansible_loop_var": "item", "changed": false, "item": "/usr/share/ansible/roles/backup-and-restore/vars/redhat.yml"}

PLAY RECAP ***********************************************************************************
backup : ok=12 changed=6 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```
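The lab script created ~/bar_nfs_setup.yaml for us, so this article does not show its contents. A wrapper playbook of this kind usually just applies the role to an inventory group. The --tags bar_setup_nfs_server option then selects which of the role's tasks run. The snippet below writes a hypothetical equivalent so you can compare it with the lab's file. It is not the lab's actual playbook, and the play name and PLAYBOOK_DIR are my own assumptions.

```shell
#!/usr/bin/env bash
# Sketch: write a minimal wrapper playbook for the backup-and-restore role.
# Assumption: the lab's bar_nfs_setup.yaml looks roughly like this; compare before relying on it.
set -euo pipefail

PLAYBOOK_DIR="${PLAYBOOK_DIR:-$(mktemp -d)}"   # hypothetical output location

cat > "${PLAYBOOK_DIR}/bar_nfs_setup.yaml" <<'EOF'
# Targets the [BACKUP_NODE] group from nfs-inventory.ini.
# The --tags option on the command line picks which of the role's tasks run.
- name: Set up the NFS server for ReaR backups
  hosts: BACKUP_NODE
  become: true
  roles:
    - role: backup-and-restore
EOF

echo "Wrote ${PLAYBOOK_DIR}/bar_nfs_setup.yaml"
```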

Generating the host inventory

Generate the static inventory file with tripleo-ansible-inventory.

```
(undercloud) [stack@director ~]$ tripleo-ansible-inventory \
--ansible_ssh_user heat-admin \
--static-yaml-inventory /home/stack/tripleo-inventory.yaml
```

Check the contents of the inventory file.

```
(undercloud) [stack@director ~]$ head -n 40 tripleo-inventory.yaml
Undercloud:
  hosts:
    undercloud: {}
  vars:
    ansible_connection: local
    ansible_host: localhost
    ansible_python_interpreter: /usr/bin/python3
    ansible_remote_tmp: /tmp/ansible-${USER}
    auth_url: https://172.25.249.201:13000
    cacert: null
    os_auth_token: gAAAAABoJuImd0Fz8Kgv7oZnDDJQ4r824VkxXREFzUBzQwlheWNWlSRCLX1xFN3v5yi9UbF0YCVGGTnQPEOcPQHbyyhpM51j7CpDJB_DUQ8TAg7uNcaV2It4zgvjOJwckjL75A7u8i6K1pEYcb6IJeiv9-codODORSg9ToCKKG80BEOxS9zhUxs
    overcloud_admin_password: redhat
    overcloud_horizon_url: http://172.25.250.50:80/dashboard
    overcloud_keystone_url: http://172.25.250.50:5000
    plan: overcloud
    plans: [overcloud]
    project_name: admin
    undercloud_service_list: [tripleo_nova_compute, tripleo_heat_engine, tripleo_ironic_conductor,
      tripleo_swift_container_server, tripleo_swift_object_server, tripleo_mistral_engine]
    undercloud_swift_url: https://172.25.249.201:13808/v1/AUTH_90ef963f6dca474ba045e212c7afaa97
    username: admin
Controller:
  children:
    overcloud_Controller: {}
overcloud_Controller:
  hosts:
    controller0: {ansible_host: 172.25.249.56, canonical_hostname: controller0.overcloud.example.com,
      ctlplane_hostname: controller0.ctlplane.overcloud.example.com, ctlplane_ip: 172.25.249.56,
      deploy_server_id: 6d712d0a-cb71-4cdc-93dc-f2d69ffc520b, external_hostname: controller0.external.overcloud.example.com,
      external_ip: 172.25.250.1, internal_api_hostname: controller0.internalapi.overcloud.example.com,
      internal_api_ip: 172.24.1.1, management_hostname: controller0.management.overcloud.example.com,
      management_ip: 172.24.5.1, storage_hostname: controller0.storage.overcloud.example.com,
      storage_ip: 172.24.3.1, storage_mgmt_hostname: controller0.storagemgmt.overcloud.example.com,
      storage_mgmt_ip: 172.24.4.1, tenant_hostname: controller0.tenant.overcloud.example.com,
      tenant_ip: 172.24.2.1}
  vars:
    ansible_ssh_user: heat-admin
    bootstrap_server_id: 6d712d0a-cb71-4cdc-93dc-f2d69ffc520b
    serial: '1'
    tripleo_role_name: Controller
...output omitted...
```
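The following playbooks run against the undercloud and controller0, so it is worth confirming that the generated file contains the expected groups. The sketch below prints the top-level group names, which are the unindented keys such as Undercloud and Controller. inventory_groups is a hypothetical helper, and it relies on the block-style layout shown above rather than on a real YAML parser. For the full picture, ansible-inventory -i ~/tripleo-inventory.yaml --graph prints the complete group and host tree.

```shell
#!/usr/bin/env bash
# Sketch: list the top-level groups of a static YAML inventory.
# Assumption: groups are unindented keys ending in ':' (e.g. "Undercloud:", "Controller:").
set -euo pipefail

inventory_groups() {
  grep -E '^[A-Za-z0-9_]+:[[:space:]]*$' "$1" | sed 's/:[[:space:]]*$//'
}

# Usage on the director:
#   inventory_groups ~/tripleo-inventory.yaml
```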

Installing and configuring ReaR (on the director server)

ReaR must be installed on every node that you want to back up. Because we are backing up the director, we install and configure ReaR on the director.

```
(undercloud) [stack@director ~]$ ansible-playbook -v -i ~/tripleo-inventory.yaml \
--extra="ansible_ssh_common_args='-o StrictHostKeyChecking=no'" \
--become --become-user root --tags bar_setup_rear \
~/bar_rear_setup-undercloud.yaml
```

Installing and configuring ReaR (on the controller0 server)

```
(undercloud) [stack@director ~]$ ansible-playbook -v -i ~/tripleo-inventory.yaml \
-e tripleo_backup_and_restore_exclude_paths_controller_non_bootrapnode=false \
--extra="ansible_ssh_common_args='-o StrictHostKeyChecking=no'" \
--become --become-user root --tags bar_setup_rear \
~/bar_rear_setup-controller.yaml
```

Backing up the undercloud (director)

The backup takes about 20 to 30 minutes. The exact time depends on the size of the environment.

```
(undercloud) [stack@director ~]$ ansible-playbook -v -i ~/tripleo-inventory.yaml \
--extra="ansible_ssh_common_args='-o StrictHostKeyChecking=no'" \
--become --become-user root --tags bar_create_recover_image \
~/bar_rear_create_restore_images-undercloud.yaml
```
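The ansible-playbook commands in this article differ only in the inventory, the tag, the playbook and, for controller0, one extra variable. A small shell function can capture the shared options. run_bar and DRY_RUN are hypothetical names introduced here. With DRY_RUN=1, the function only prints the command it would run, which is a cheap way to double-check the tag before starting a long backup.

```shell
#!/usr/bin/env bash
# Sketch: wrap the repeated ansible-playbook invocation used in this article.
# run_bar is a hypothetical helper; DRY_RUN=1 prints the command instead of running it.
set -euo pipefail

run_bar() {
  local inventory="$1" tag="$2" playbook="$3"
  shift 3   # remaining arguments (for example: -e name=value) are passed through
  local cmd=(ansible-playbook -v -i "$inventory"
    --extra="ansible_ssh_common_args='-o StrictHostKeyChecking=no'"
    --become --become-user root --tags "$tag"
    "$@" "$playbook")
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%q ' "${cmd[@]}"; echo
  else
    "${cmd[@]}"
  fi
}

# Usage on the director:
#   run_bar ~/tripleo-inventory.yaml bar_setup_rear ~/bar_rear_setup-undercloud.yaml
#   run_bar ~/tripleo-inventory.yaml bar_setup_rear ~/bar_rear_setup-controller.yaml \
#     -e tripleo_backup_and_restore_exclude_paths_controller_non_bootrapnode=false
#   DRY_RUN=1 run_bar ~/tripleo-inventory.yaml bar_create_recover_image \
#     ~/bar_rear_create_restore_images-undercloud.yaml
```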

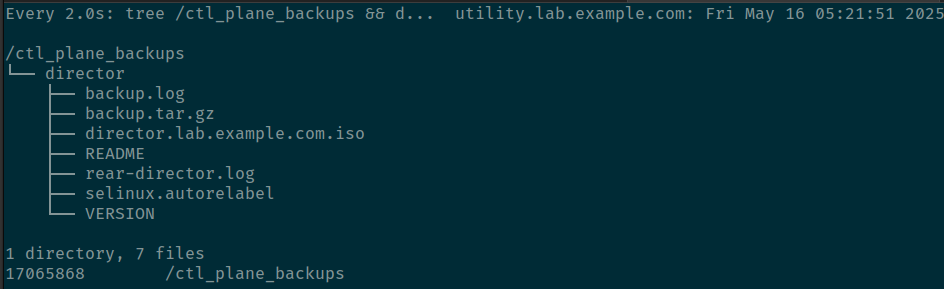

While you wait, you can open a new terminal and watch the /ctl_plane_backups directory (defined in the Ansible playbook) for changes.
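On the utility node, watch -n 30 ls -lh /ctl_plane_backups/director is enough to see files appear. If you would rather block until the output looks finished, the sketch below polls the directory until an ISO exists and backup.tar.gz has stopped growing between two polls. This is my own heuristic, not a completion signal from ReaR. The PLAY RECAP of the Ansible run remains the authoritative indicator. wait_for_rear_output is a hypothetical helper, and the director subdirectory matches the layout of the export shown in the next steps.

```shell
#!/usr/bin/env bash
# Sketch: wait until a ReaR output directory holds an ISO and a tarball whose size is stable.
# wait_for_rear_output is a hypothetical helper; "stable size" is a heuristic, not a ReaR signal.
set -euo pipefail

wait_for_rear_output() {
  local dir="$1" timeout="${2:-2400}" interval="${3:-30}"
  local waited=0 last_size=-1 size
  while (( waited < timeout )); do
    if [[ -f "$dir/backup.tar.gz" ]] && compgen -G "$dir/*.iso" > /dev/null; then
      size=$(stat -c %s "$dir/backup.tar.gz")
      # Treat the tarball as finished once its size is unchanged between two polls.
      if [[ "$size" -gt 0 && "$size" -eq "$last_size" ]]; then
        echo "ReaR output in $dir looks complete (${size} bytes)"
        return 0
      fi
      last_size="$size"
    fi
    sleep "$interval"
    waited=$(( waited + interval ))
  done
  echo "timed out after ${timeout}s waiting for $dir" >&2
  return 1
}

# Usage on utility:
#   wait_for_rear_output /ctl_plane_backups/director
```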

Check the backup directory on utility

```
[student@workstation ~]$ showmount -e utility
Export list for utility:
/ctl_plane_backups 172.25.250.0/24
```

Creating the mount point

```
[student@workstation ~]$ sudo mkdir /mnt/ctl_plane_backups
[student@workstation ~]$ sudo mount utility:/ctl_plane_backups \
/mnt/ctl_plane_backups
[student@workstation ~]$ sudo tree /mnt/ctl_plane_backups
/mnt/ctl_plane_backups
└── director
    ├── backup.log
    ├── backup.tar.gz
    ├── director.lab.example.com.iso
    ├── README
    ├── rear-director.log
    ├── selinux.autorelabel
    └── VERSION

1 directories, 7 files
```

Inspecting the tarball

```
[student@workstation ~]$ sudo tar tzvf \
/mnt/ctl_plane_backups/director/backup.tar.gz
drwxr-xr-x root/root         0 2020-10-30 11:30 ./
lrwxrwxrwx root/root         0 2018-08-12 05:46 bin -> usr/bin
dr-xr-xr-x root/root         0 2020-10-30 10:37 boot/
drwx------ root/root         0 2018-08-12 05:31 boot/efi/
drwxr-xr-x root/root         0 2020-08-12 11:51 boot/efi/EFI/
drwx------ root/root         0 2020-08-12 11:52 boot/efi/EFI/redhat/
-rwx------ root/root       182 2020-07-31 17:18 boot/efi/EFI/redhat/BOOTX64.CSV
-rwx------ root/root   1162528 2020-07-31 17:18 boot/efi/EFI/redhat/mmx64.efi
...output omitted...
```
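Listing the archive also works as an integrity check. tar has to decompress the whole gzip stream to list it, so a truncated or corrupted file makes it fail. The sketch below turns that into a pass/fail test and also checks for a few top-level paths. I chose etc/ and boot/ as examples; they are not a list from ReaR. verify_rear_tarball is a hypothetical helper. Run it as root, because the backup files are owned by root.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check a ReaR backup tarball before relying on it for a restore.
# verify_rear_tarball is a hypothetical helper; the paths checked are illustrative examples.
set -euo pipefail

verify_rear_tarball() {
  local tarball="$1" list path
  list=$(mktemp)
  # tar exits non-zero if the gzip stream is truncated or corrupted.
  if ! tar tzf "$tarball" > "$list" 2>/dev/null; then
    echo "cannot read archive: $tarball" >&2
    rm -f "$list"
    return 1
  fi
  # Entries may be listed with or without a leading "./".
  for path in etc/ boot/; do
    if ! grep -qE "^(\./)?${path}" "$list"; then
      echo "missing ${path} in $tarball" >&2
      rm -f "$list"
      return 1
    fi
  done
  echo "OK: $(wc -l < "$list") entries in $tarball"
  rm -f "$list"
}

# Usage on workstation (as root):
#   verify_rear_tarball /mnt/ctl_plane_backups/director/backup.tar.gz
```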

This article is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. Please attribute the original author and source when sharing.